Had an escalation this week where a customer was moving from ESXi to AHV on new self-built clusters.

Everything was built and tested (shifting from ESXi on 10GbE to AHV on 25GbE, and moving from leaf switches to TOR switches). All was OK in NCC and the smoke tests passed.

But… problems appeared quickly once the clusters were loaded up with workloads migrated over using Move. The VMs and hosts started to experience strange network problems that didn’t make much sense at the outset, especially on such a simple setup.

Upon initial inspection we were seeing a rapidly growing number of dropped RX packets on the physical links coming into each AHV host, but no reported errors on the switch side.

There was no obvious rhyme or reason to what was going on. Throughput was 2Gbps on a 25Gbps link, so this clearly wasn’t down to the sheer amount of bandwidth being consumed.

Curiously, we weren’t seeing any CRC errors or NCC check alerts on host_nic_error_check, just these growing numbers of dropped RX packets, so the initial layer 1 theory of cabling or modules being at fault didn’t hold up. We didn’t see any buffer exhaustion or other reasons to make us think this was a physical NIC problem.

AHV# ethtool -S <eth> | egrep "rx_errors|rx_crc_errors|rx_missed_errors"
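To put a number on "rapidly growing", the drop counter can be sampled twice and turned into a rate. The following is a minimal sketch, assuming a Linux host that exposes the generic kernel counters under /sys/class/net/<iface>/statistics; these are not necessarily the same driver-specific counters that ethtool -S prints, and the function names are made up for illustration.

```python
import time
from pathlib import Path


def read_counter(iface, name="rx_dropped", sysfs_root="/sys/class/net"):
    """Read one kernel interface counter from sysfs and return it as an int."""
    path = Path(sysfs_root) / iface / "statistics" / name
    return int(path.read_text().strip())


def drop_rate(before, after, seconds):
    """Drops per second between two samples of a growing counter."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return (after - before) / seconds


def watch(iface, interval=5.0, samples=3):
    """Print the rx_dropped rate for a few consecutive sampling windows."""
    previous = read_counter(iface)
    for _ in range(samples):
        time.sleep(interval)
        current = read_counter(iface)
        rate = drop_rate(previous, current, interval)
        print(f"{iface}: {rate:.1f} rx_dropped/s")
        previous = current
```

Calling watch("eth0") (the interface name is an assumption) prints one rate per window, which makes it easier to see whether drops track busy periods.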

We confirmed with the owner of the network that there was no storm control configuration in place on the switching, and that there were no reported broadcast storms or other typical problem logs. Seeing the same happen across multiple hosts, and on each of the pair of TOR switches, we had to move on.

A conversation with the service owners of the VMs having the most problems revealed that their VMs were used in high frequency trading applications and relied upon a constant stream of send/receive using multicast across multiple multicast groups, usually 20-30MB/s per VM. They reported a generally choppy service, with data being slow and lost: a poor experience all round.

We theorised that as this issue appeared to be isolated to multicast traffic only, we should do some basic checks to make sure we’re sending and receiving multicast traffic properly, and we’re seeing group membership on the problematic VMs.

root# netstat -g (to show the VM is listening on Multicast)

On VM 1 – root# tcpdump -i eth0 host 224.5.3.2

On VM 2 – root# echo -n Hello | ncat -vu 224.5.3.2

We saw the multicast traffic coming in, so it wasn’t that either.
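For anyone without tcpdump or ncat to hand, the receiving side of that check can be sketched in Python. Joining the group is what makes the guest kernel send an IGMP membership report, which is the thing a snooping switch listens for. This is a rough sketch only: the port number is hypothetical (use whatever the sender targets) and the function names are our own.

```python
import socket

GROUP = "224.5.3.2"  # the group used in the test above
PORT = 5007          # hypothetical port, not taken from the original test


def build_mreq(group, local_ip="0.0.0.0"):
    """Pack an ip_mreq: 4-byte group address followed by 4-byte local address."""
    return socket.inet_aton(group) + socket.inet_aton(local_ip)


def open_receiver(group=GROUP, port=PORT):
    """Bind a UDP socket and join the multicast group on the default interface."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_mreq(group))
    return sock
```

With the socket open, sock.recvfrom(2048) blocks until a datagram for the group arrives, and the group should show up in netstat -g for as long as the socket stays open.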

We turned our attention to the theory that maybe multicast wasn’t simply broken, but rather was being overwhelmed when things got busy, which led us onto thinking about IGMP snooping.

Cloudflare has a great explanation of IGMP snooping. The gist is that, without any config in place, the Nutanix AHV host will flood any multicast traffic it receives to all connected virtual/tap ports, as it has no way of knowing which VM/NIC really wants it and would rather be safe than sorry. On a quiet platform this could potentially fly under the radar: not terribly efficient, but it works.

In our case though, with multiple VMs and multiple multicast groups on each of these VMs, all sending and receiving, we believed we might be seeing the impact of a default configuration working against us.

AHV supports the concept of setting up IGMP snooping, much the same as a physical switch can, to allow for more ‘targeted’ directing of multicast traffic – to just those VMs/NICs that want the traffic rather than everyone. (effectively, the evolution of a hub to a switch!)
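To get a feel for why flooding hurts on a busy host, here is a toy model that counts how many packet copies the virtual switch has to deliver with and without snooping. It is only a counting sketch: the group addresses, port names and packet counts are made up, and it says nothing about how AHV implements forwarding internally.

```python
from typing import Dict, Set


def deliveries_flooding(packets_per_group: Dict[str, int], num_ports: int) -> int:
    """Without snooping, every multicast packet is copied to every virtual port."""
    return sum(packets_per_group.values()) * num_ports


def deliveries_snooping(packets_per_group: Dict[str, int],
                        members: Dict[str, Set[str]]) -> int:
    """With snooping, each packet is copied only to ports that joined its group."""
    return sum(count * len(members.get(group, set()))
               for group, count in packets_per_group.items())


# Hypothetical one-second snapshot on a host with 10 virtual ports.
packets = {"239.1.1.1": 1000, "239.1.1.2": 500}
members = {"239.1.1.1": {"vm1"}, "239.1.1.2": {"vm1", "vm2"}}

print(deliveries_flooding(packets, num_ports=10))  # 15000 copies
print(deliveries_snooping(packets, members))       # 2000 copies
```

In this made-up snapshot the flooded case delivers 7.5 times as many copies, and most of them land on VMs that never asked for the traffic and simply have to drop it.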

cvm$ acli net.get_virtual_switch vs0

igmp_config {

enable_querier: False

enable_snooping: False

}

We enabled IGMP snooping, initially with a 30 second timeout:

cvm$ acli net.update_virtual_switch vs0 enable_igmp_snooping=true igmp_snooping_timeout=30

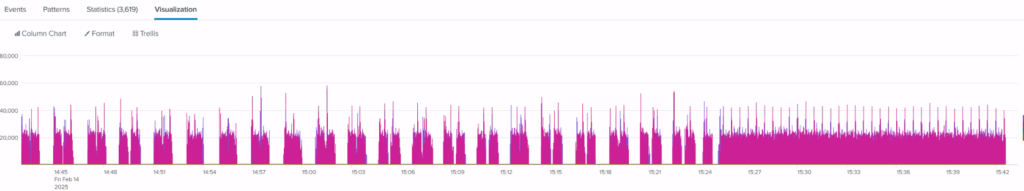

Within a couple of minutes the service owners reported a difference in experience. We were now seeing periods of 30-40 seconds of perfect service, followed by 30-40 seconds of total silence. This repeated over and over, suggesting some kind of flapping back and forth.

We undid our change, and disabled IGMP snooping again, and the behaviour changed back to how it was before, working but at a poor level of performance – crucially without the gaps.

This led us to dig a little further on the network switch side, confirming that the IGMP querier was in place and that the physical interfaces facing the AHV hosts were showing IGMP group memberships. Then came the smoking gun: the IGMP querier interval was set to 125 seconds rather than the default of 60 seconds. A quick bit of math suggested that 125 (the IGMP querier interval on the switch) minus 30 (our IGMP snooping timeout) left us with a 95 second gap that could explain the drops happening.
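That bit of math can be written down as a tiny timeline model. It assumes, as a deliberate simplification, that a membership report only arrives in response to each general query and that the snooping entry then lives for exactly the timeout. Real behaviour also depends on response delays and unsolicited reports from the guests, so this does not reproduce the 30-40 second cadence we observed; it only shows why a timeout shorter than the query interval leaves holes.

```python
def forwarding_windows(query_interval, snooping_timeout, duration):
    """Return (forwarded_seconds, silent_seconds) over `duration` seconds.

    Simplified model: a report is seen at t = 0, query_interval,
    2 * query_interval, ... and the snooping entry stays valid for
    snooping_timeout seconds after each report.
    """
    forwarded = 0
    for t in range(duration):
        seconds_since_report = t % query_interval
        if seconds_since_report < snooping_timeout:
            forwarded += 1
    return forwarded, duration - forwarded


# Switch querier at 125 s, snooping timeout at 30 s: mostly silence.
print(forwarding_windows(125, 30, 250))   # (60, 190)

# Same querier, snooping timeout at 300 s: the entry never expires.
print(forwarding_windows(125, 300, 250))  # (250, 0)
```

The takeaway is simply that the snooping timeout needs to be comfortably longer than the querier interval, otherwise the membership entry ages out before the next query refreshes it.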

We re-ran the command using the default 300 seconds (which we thought was a long time!)

cvm$ acli net.update_virtual_switch vs0 enable_igmp_snooping=true igmp_snooping_timeout=300

Immediately the service owners confirmed performance was both consistent, and at the expected levels.

The Splunk graph showed a wonderful difference … guess what time we made the change 😉

We went into the weekend, quietly optimistic that this was going to be our solution… time will tell!

The Nutanix AHV Admin Guide talks through the configuration of IGMP snooping – https://portal.nutanix.com/page/documents/details?targetId=AHV-Admin-Guide-v10_0:ahv-cluster-nw-ebable-igmp-snooping-ahv-t.html

It is worth mentioning one other thing we tried, on a Nutanix SRE recommendation: “Enabling RSS Virtio-Net Multi-Queue by increasing the Number of VNIC Queues”, as these VMs had 8 vCPUs and were using their NICs heavily. The queue count was set initially at 1, then 2, then 4, with subtle improvements each time. This wasn’t going to scale though, as it has to be configured on every VM.
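When stepping the queue count up like this, it helps to confirm from inside the guest that the extra queues actually appeared. A small sketch, assuming a Linux guest where the kernel lists per-interface queues as rx-N and tx-N directories under /sys/class/net/<iface>/queues; the function name is our own.

```python
import os


def count_queues(iface, sysfs_root="/sys/class/net"):
    """Return (rx_queues, tx_queues) for an interface by listing sysfs."""
    queue_dir = os.path.join(sysfs_root, iface, "queues")
    names = os.listdir(queue_dir)
    rx = sum(1 for name in names if name.startswith("rx-"))
    tx = sum(1 for name in names if name.startswith("tx-"))
    return rx, tx
```

Running count_queues("eth0") (interface name assumed) before and after each change gives a quick sanity check that the guest is seeing the queue count that was configured.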